Recently we learned that the App Store Freedom Act may be included in the House Energy and Commerce Committee’s kids’ digital protection package, which is slated to be taken up once the government shutdown is over. We are delighted that Congress is considering options aimed at improving online child safety. But the App Store Freedom Act would do the opposite.

Trusted Future has previously explained the many problems with the bill, including how it would fundamentally undermine the privacy, safety and security features that have made modern mobile devices so useful. We’ve also outlined how Congress can avoid learning the wrong lessons from Europe’s failed Digital Markets Act (DMA) experiment, which this bill replicates.

But a group of lobbyists is now claiming that the App Store Freedom Act “would promote online child safety,” an argument they are making to get the bill considered alongside other important child safety legislation. The problem is that, rather than promoting online child safety, the bill would unleash a host of new and substantial child safety risks.

Congress should be learning from, not repeating, the mistakes of Europe’s DMA, which has been a disaster for children’s safety. The App Store Freedom Act, like Europe’s DMA, includes far-reaching requirements aimed at creating a regulator-imagined, EU-style marketplace of ‘competition’ by breaking down the so-called “walled garden.” But in doing so, both laws actually break down the walls and safeguards used to protect our kids, creating unguarded side doors and unregulated back doors that let bad actors harm kids and exploit parents’ trust. Like the DMA, the bill would deny users the ability to choose the safest, most secure, and most privacy-friendly mobile ecosystem. It would actually limit, not enhance, market competition.

As Europe’s failed DMA experiment has shown, this type of ex-ante framework breaks core child protections that device manufacturers build into mobile phones. As a result, this legislation could seriously damage kids’ safety online:

- It would expand children’s access to harmful, adult and pornographic apps;

- It would undermine children’s privacy by giving third parties access to a child’s sensitive private notifications;

- It would prevent parents from holistically protecting their kids by limiting the functionality of popular parental controls; and

- It would expand access to some of the most addictive technologies aimed at children.

Moreover, we found that Europe’s identical framework actually makes it harder for app developers to compete and reach global markets, so it is not better for competition, despite what the bill’s proponents claim.

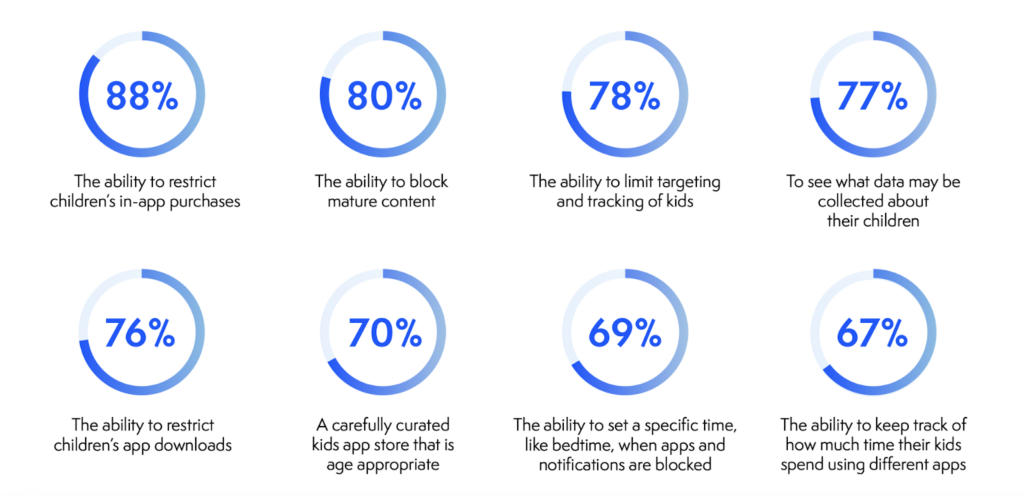

To better understand the challenges that parents are confronting today, Trusted Future conducted a comprehensive national survey of parents’ attitudes about technology. We found that an overwhelming majority of parents (91%) want the apps they download onto their devices to be reviewed by experts for privacy and safety before they are made available to the public, something the App Store Freedom Act would prevent. And 75% of parents would be concerned if device makers were required to allow apps that had not been reviewed by experts to be available for download, as the bill would require. Parents overwhelmingly (90%) are concerned about protecting their children’s privacy, identity and safety online. We also found that parents’ top priority for policymakers (63%) is adopting strong baseline privacy protections for children, and the App Store Freedom Act would weaken these baselines.

Expanding Children’s Access to Adult, Harmful and Pornographic Material

Today’s children are the most connected generation in history, but also some of the most vulnerable users of new technologies. Experts have found that limiting children’s access to pornographic and other adult content is critical to their well-being. Given the scope of adult content online, both major mobile app ecosystems have long used app store review and governance to prevent such access.

Apple’s App Store has long rejected illicit and pornographic apps through its terms of service and has never allowed a pornographic app onto its platform in its more than 15-year history. Google’s Play Store likewise prohibits apps that contain or promote pornography.

However, Section 2(a) of the App Store Freedom Act requires mobile devices to allow adult apps and a host of other potentially harmful apps to be installed without core safeguards. In Europe, a nearly identical provision now requires iPhones to give European users expanded access to pornographic, illicit, and related adult apps, including new hard-core pornographic distribution apps offered through third-party app stores, and to do so in a way that gets around built-in device-level parental controls.

After Europe passed the DMA and enabled third-party app marketplaces, one of the very first apps to premiere through AltStore PAL was Hot Tub, the world’s first native iPhone porn app. It is now available throughout Europe and allows children and other users to search for and view pornographic videos from multiple online sources.

This digital disconnect happens because the App Store Freedom Act, like the DMA, prohibits device producers from restricting, on content grounds, apps that are made available outside official app marketplaces. If passed, the App Store Freedom Act would likewise mean that, for the first time, mobile app marketplaces in the U.S. would no longer be able to screen for, prohibit, or prevent access to apps that distribute pornography. It would even require mobile devices to allow apps that deceive users about their intent, or that switch functionality after being downloaded, without mandating an option to remove them.

Trusted Future’s survey found parents strongly support the parental safety tools that companies have built into their app stores, with 80% supporting the ability to block mature content. And 75% of parents say they would be concerned if device makers were required to allow apps that had not been reviewed by experts – as would be required by the App Store Freedom Act.

Limiting the Functionality of Popular and Effective Parental Control Tools

Today’s two major mobile app marketplaces have established a robust set of tools and safeguards to protect children. These tools allow parents to set time limits for how long children can spend on specific apps, block mature content, automatically detect and block explicit content, restrict in-app purchases, set limits on who their kids can chat with, limit apps that attempt to track their children’s online activity, and approve app downloads. Parents use these trusted controls to reinforce good habits and create safer online spaces for kids to learn and play. They are popular and effective: according to a survey by the Family Online Safety Institute, 87% of parents already use tech tools to oversee their children’s digital lives.

But instead of building upon these popular tools, the App Store Freedom Act, like the DMA, unintentionally requires platforms to undermine and degrade these parental controls.

For example, today in the U.S., parents can rely on platform-level app store tools to: 1) see every app’s age rating on its app store download page, 2) prevent children from buying or downloading apps onto their phones without parental approval, and 3) block mature content. But because of the DMA, none of these tools is now universally available across the platform in Europe, and none can assure parents that their children are appropriately protected.

In practice, this means the official app store’s platform and governance system can no longer implement parental tools that block a child’s access to an age-inappropriate sideloaded app sent to the child via a link from a friend or stranger. It also means a platform can no longer prohibit or prevent age-inappropriate apps that distribute pornography or encourage consumption of tobacco, vape products, illegal drugs, or excessive amounts of alcohol, even on a child’s phone.

Our Trusted Future parental survey found that parents strongly support the parental safety tools that companies have built into their mobile app stores to protect their children.

Undermining Children’s Privacy by Providing Third Parties with Access to Sensitive Information about Our Kids

A phone’s notifications (the bubbles that pop up on your screen) are an integral part of the way everyone uses smartphones, helping us stay informed at a glance about messages, calls, weather alerts, calendar events, and more. However, because of the DMA’s interoperability mandates, those same notifications in Europe may now be intercepted and used in ways that put private data at risk, including on phones used by children.

The App Store Freedom Act’s interoperability provisions, like the DMA’s interoperability requirements, undermine key privacy protections and require mobile device providers to share sensitive user data with others. For example, the DMA’s interoperability framework requires smartphones to provide third-party devices with the content of all smartphone notifications, giving data-hungry apps the opportunity to hoover up all of a child’s personal and private notifications and send them, unencrypted, to their servers. This unprotected data could be mined, used by surveillance marketers to target ads to minors, or sold to unscrupulous third parties without restriction.

This comes at a time when 73% of parents are concerned about personal data being collected by third parties without their consent, according to Trusted Future’s survey of parents. The App Store Freedom Act is built upon the same misguided interoperability framework and imports the same privacy flaws as Europe’s DMA. It also fails to include any provision to safeguard children’s privacy, safety or security.

Why is it that the text of the App Store Freedom Act never even mentions the need to protect privacy, safety, or security? Why doesn’t it include safeguards that would allow harmful apps to be screened before they get to our kids? Why doesn’t it include protections for popular parental controls, or enable app store governance mechanisms that would restrict the kinds of apps that can be distributed to minors? These are important questions for policymakers.

As social media plays an increasingly pervasive role in their children’s lives, many parents worry that their children’s privacy, safety and well-being are at risk.

Protecting children’s data privacy and safety should be an urgent and collective imperative. But policymakers need to be asking the right questions so we get to smart, effective, trustworthy solutions that protect privacy and enable tools that were built with child online safety in mind.

To advance a more trusted digital future, we need to reject proposals like the App Store Freedom Act that take away critical online safety protections – and instead advance pragmatic solutions that actually improve online child safety.